Humans can convey a wide range of emotions through complex and rich touch gestures. This is not yet the case for robotic devices.

This research explores the design space of device-initiated touch for conveying emotions with an interactive system reproducing a variety of human touch characteristics.

This research project is conducted by Marc Teyssier, Gilles Bailly, Catherine Pelachaud and Eric Lecolinet.

The researchers are affiliated with Telecom ParisTech, HCI Sorbonne Université, and CNRS.

General Approach

We study how humans perceive and interpret machine-produced touch in terms of emotions. Our overall approach consists of transposing experiments on emotional perception from human-to-human interaction studies to machine-to-human interaction. This approach is frequently used in the Embodied Conversational Agent (ECA) literature and is developed in three steps, described below.

- The first step is to select the touch characteristics to explore in controlled experiments. Current technology limits the set of factors that can be implemented, so we chose four: Amplitude, Velocity, Force, and Type of gesture.

- The second step is an experiment investigating how humans perceive eight machine-generated touches, in order to select the touch parameters that tend to have distinct effects on the perception of emotions.

- The last step investigates how context modulates the perception of machine-generated touch. Context is defined here by the facial expressions of a virtual agent. This study brings us closer to examining the perception of emotions through multimodal signals, namely facial expressions, text, and touch, with a view to endowing emotional Virtual Agents with touching capabilities.
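As a rough illustration of the four factors selected in the first step, a single machine-generated touch could be described by a small record like the one below. This is only a sketch: the class names, units, gesture labels, and numeric values are assumptions made for the example and are not taken from the study.

```python
from dataclasses import dataclass
from enum import Enum


class GestureType(Enum):
    # Hypothetical gesture labels; this page does not list the gesture types used.
    STROKE = "stroke"
    PAT = "pat"


@dataclass(frozen=True)
class TouchStimulus:
    """One machine-generated touch, described by the four selected factors."""
    amplitude_cm: float   # distance covered on the forearm (unit assumed)
    velocity_cm_s: float  # speed of the hand along the forearm (unit assumed)
    force_n: float        # contact force applied on the skin (unit assumed)
    gesture: GestureType

    def duration_s(self) -> float:
        """Time needed to cover the amplitude at constant velocity."""
        if self.velocity_cm_s <= 0:
            raise ValueError("velocity must be positive")
        return self.amplitude_cm / self.velocity_cm_s


# Illustrative values only, not the levels used in the experiments.
gentle_stroke = TouchStimulus(
    amplitude_cm=10.0,
    velocity_cm_s=5.0,
    force_n=0.5,
    gesture=GestureType.STROKE,
)
```

Grouping the factors in one immutable record makes it straightforward to enumerate combinations of factor levels when preparing a controlled experiment.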

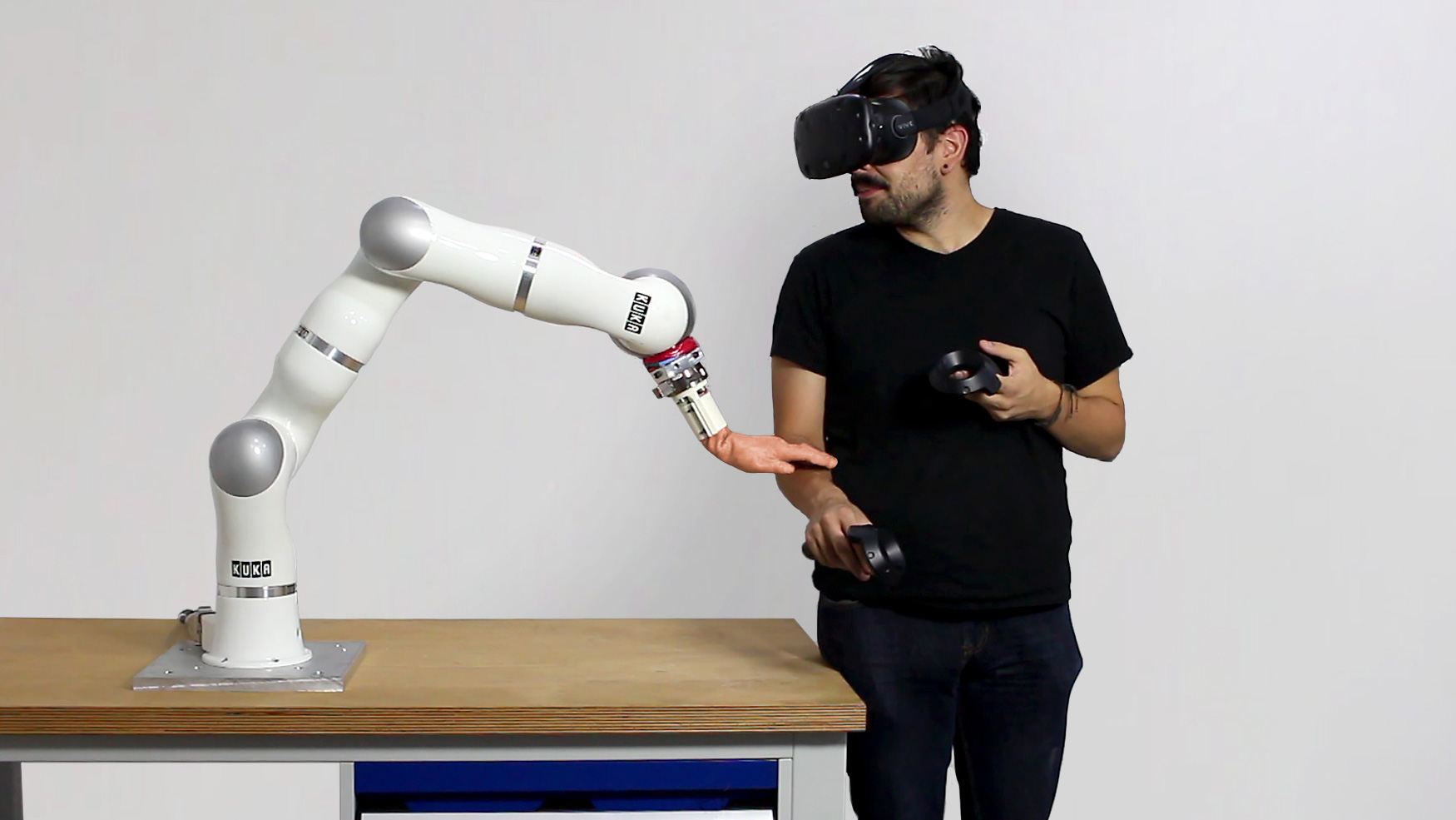

Device

To precisely control gestural actions, the device is built around a KUKA LWR4+ compliant robotic arm with 7 degrees of freedom, originally designed with safety and collaborative robotics applications in mind. In particular, the repeatability of the end-effector positioning in 3D space allows us to control the contact force precisely and reliably over time. Inspired by bionic prosthetic hands, the robotic arm is augmented with a silicone human hand attached at its tip.

To reinforce the perception of being touched by a human, the fingertip was covered with a thin layer of wax to resemble human skin.

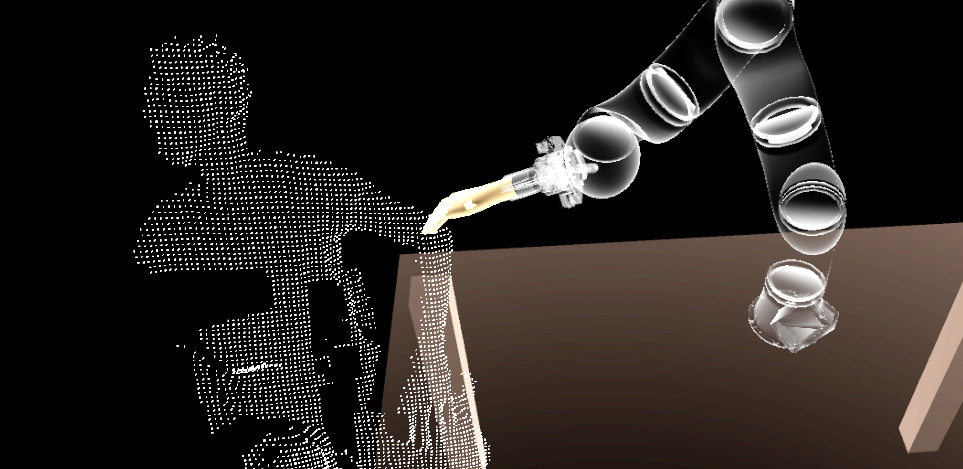

Focus on the tracking

The participant's forearm was carefully tracked so that the robot could follow its topology and perform movements on its surface in real time. To this end, we used a Microsoft Kinect V2 to track the location and anatomy of the user's arm, to ensure that the robot's hand follows the arm's morphology, and to adapt the force intensity along the arm. The force is measured with a dedicated sensor (ATI F/T Sensor Mini45) connected to the end-effector of the robot.
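One simple way to picture how a measured contact force can be used to adapt the pressure along the arm is a proportional correction applied along the surface normal. The sketch below is an assumption made for illustration and is not the controller used in this project; the function name, gain, and step limit are hypothetical.

```python
def normal_offset_mm(
    target_force_n: float,
    measured_force_n: float,
    gain_mm_per_n: float = 0.5,
    max_step_mm: float = 1.0,
) -> float:
    """Return a displacement along the surface normal of the arm.

    A positive value moves the hand toward the arm (more pressure),
    a negative value retracts it (less pressure). The step is clamped
    so that one correction never exceeds max_step_mm.
    """
    error_n = target_force_n - measured_force_n
    step_mm = gain_mm_per_n * error_n
    return max(-max_step_mm, min(max_step_mm, step_mm))


# Hypothetical readings: the hand presses too lightly, then far too hard.
too_light = normal_offset_mm(target_force_n=1.0, measured_force_n=0.5)
too_hard = normal_offset_mm(target_force_n=1.0, measured_force_n=5.0)
```

Clamping the step is a conservative choice for a device in contact with a person: a noisy force reading can then only cause a small change in position per control cycle.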

Applications

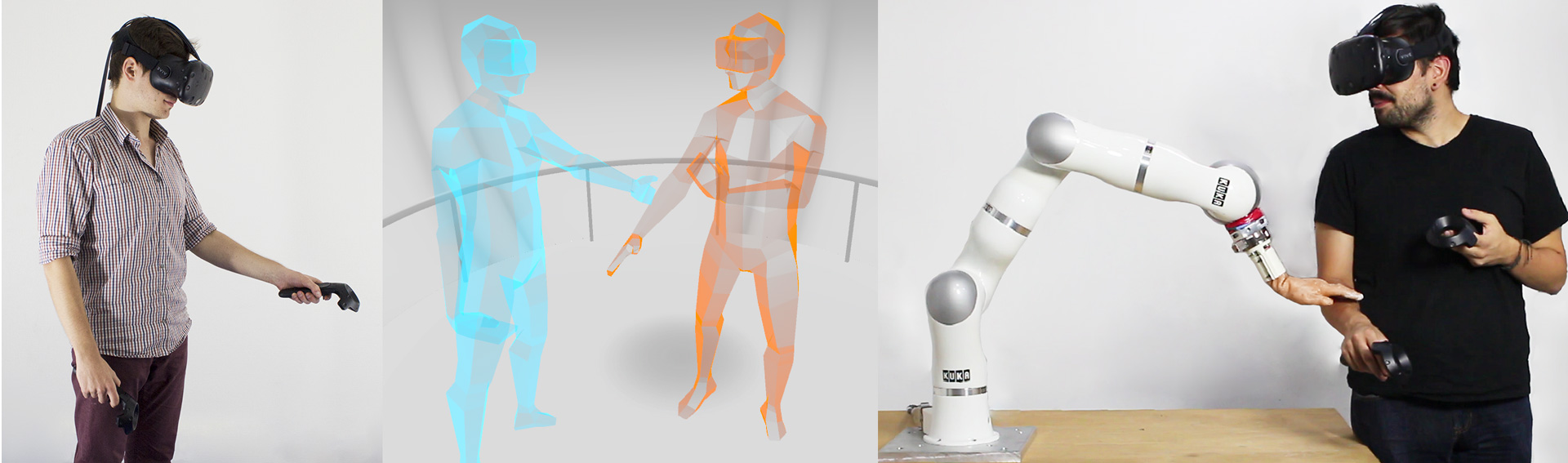

Improving communication between people

Human-to-human mediated communication interfaces would benefit from exploiting the touch channel to communicate emotions, as touch can reinforce and maintain bonds between people, convey one's emotional state, or comfort another person. Video communication, which takes advantage of non-verbal cues such as facial expressions, could benefit from touch stimuli to mirror real-life communication. Haptic feedback also helps increase immersion and presence in a co-located virtual environment. For example, a remote user can mimic a stroke; the gesture is captured by the system and reproduced on the local user's arm by the robotic arm.

Increasing realism of Robot-Human Interaction

In addition to communicating emotions through facial and body expression, Virtual Agents with touch capabilities can use this modality as a non-verbal cue.

Our findings on combining touch factors with facial expressions give some insight into how to choose the proper combination of touch stimuli and how these stimuli interact with facial expressions. These findings can be transposed to social robots to increase their expressiveness.

Publication

Conveying Emotions Through Device-Initiated Touch. Marc Teyssier, Gilles Bailly, Catherine Pelachaud, Eric Lecolinet. IEEE Transactions on Affective Computing, 07/2020.