Digital and virtual worlds mixed for productivity

Mixed reality offers an opportunity for natural interaction and performance. I explored this potential through several prototypes and user scenarios.

Several interaction techniques are used, including mid-air gestures, drawing, and virtual manipulation of physical objects. The selected applications presented here were developed over the course of 2017, mainly with Unity3D.

ArLive

ArLive proposes a new approach in which users interact with all connected objects through explicit, simple, programmable behaviours, turning the multitude of decentralized applications into a single application. To enable user customization, I used a simple visual programming feature and an augmented-reality overlay.

ArLive reinforces the link between digital life and physical objects and provides ambient notifications within the user's environment. This research focuses on the ability of these smart objects to communicate their functionalities, so that users can modify them in real time through an augmented-reality see-through interface. It also deals with the usage of connected devices and how ArLive helps us live within a connected environment.
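The idea of objects that advertise their functionalities and are driven by user-defined behaviours can be illustrated with a minimal sketch. This is not ArLive's actual implementation: the `SmartObject`, `Rule` and `Hub` names, the `door_opened` event and the lamp example are all hypothetical, chosen only to show how a single rule store could replace one application per device.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SmartObject:
    """A connected object that advertises what it can do (hypothetical model)."""
    name: str
    actions: Dict[str, Callable[[], str]] = field(default_factory=dict)

    def capabilities(self) -> List[str]:
        # The list an AR overlay could display next to the physical object.
        return sorted(self.actions)


@dataclass
class Rule:
    """One user-defined behaviour: when `event` fires, run `action` on `target`."""
    event: str
    target: SmartObject
    action: str


class Hub:
    """Single place where all rules live, instead of one app per device."""

    def __init__(self) -> None:
        self.rules: List[Rule] = []

    def add_rule(self, rule: Rule) -> None:
        # Reject rules that ask an object for something it does not advertise.
        if rule.action not in rule.target.actions:
            raise ValueError(f"{rule.target.name} cannot do {rule.action!r}")
        self.rules.append(rule)

    def emit(self, event: str) -> List[str]:
        # Run every rule bound to this event and collect the results.
        return [r.target.actions[r.action]() for r in self.rules if r.event == event]


# Hypothetical example: a lamp that switches on when a door opens.
lamp = SmartObject("lamp", {"turn_on": lambda: "lamp on", "turn_off": lambda: "lamp off"})
hub = Hub()
hub.add_rule(Rule(event="door_opened", target=lamp, action="turn_on"))
log = hub.emit("door_opened")
```

In this sketch the visual programming layer would only need to create `Rule` entries, while the overlay reads `capabilities()` to show what each object offers.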

Publication

ArLive: Unified Approach of Interaction Between Users, Operable Space and Smart Objects. Marc Teyssier, Grégoire Cliquet, Simon Richir. Virtual Reality International Conference (VRIC), Laval, France; 04/2015; 03/2015

DiveIn

DiveIn is a mobile application in which the user works in virtual reality with remote collaborators. To create a truly mobile, on-the-go virtual reality experience, we used the WearalitySky portable, foldable virtual reality glasses.

This project was made with Xavi Benavides during my stay at the MIT Media Lab Fluid Interfaces Group.

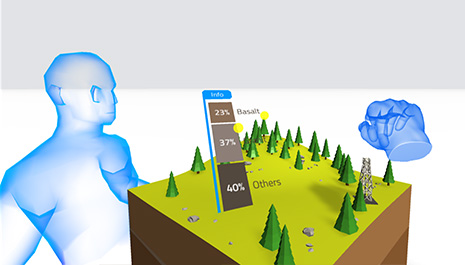

DiveIn links mobile virtual reality with collaborative work. The portable form factor of the VR goggles makes them ideal to carry in a pocket. A Leap Motion hand tracker and an additional Myo Armband are used to interact with the virtual environment. Tracking both the arm and the hands makes the interaction feel precise and natural.

These applications aim to make collaborative virtual reality a common part of our work process. Collaborators share the same virtual space, and a simplified representation of each remote collaborator is present in the scene. Users can interact with the same elements, and voice communication fosters collaboration.

We expanded on this key idea through several prototypes: on-site geological data analysis, machinery training, and microbiology exploration.

Multidraw

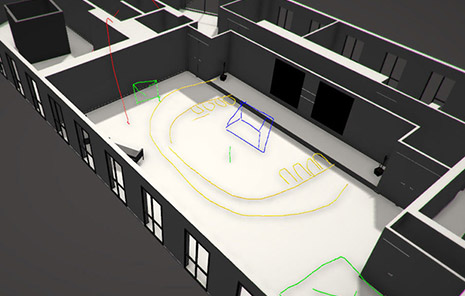

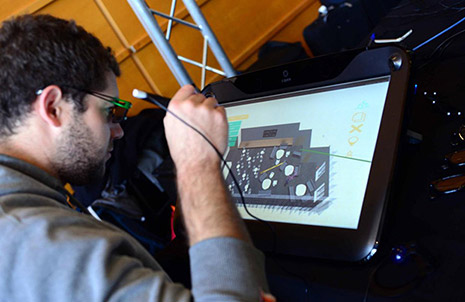

Multidraw is a mixed reality interior configuration tool. It runs simultaneously on a ZSpace and an LScreen.

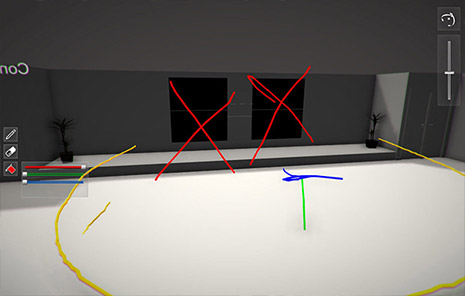

The goal of this project is to explore how furniture can be arranged in physical spaces collaboratively and immersively, and how this collaboration can take place across different devices.

The ZSpace shows a 3D virtual representation of the building, on which users can draw 3D annotations. These drawings are displayed in real time in the immersive view on the LScreen. There, users can create and modify the furniture in the room with the help of the drawn annotations.
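The flow of annotations from one device to the other can be sketched as a shared scene that notifies every connected view when a new drawing is added. This is only an in-process illustration under assumed names (`SharedScene`, `Annotation`, `subscribe`, `draw`); the real project was built mainly with Unity3D and would rely on networking between the two devices, which is not shown here.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Annotation:
    """A 3D stroke drawn by one user (hypothetical data model)."""
    author: str
    points: Tuple[Point, ...]


class SharedScene:
    """Holds all annotations and pushes each new one to subscribed views."""

    def __init__(self) -> None:
        self.annotations: List[Annotation] = []
        self._listeners: List[Callable[[Annotation], None]] = []

    def subscribe(self, listener: Callable[[Annotation], None]) -> None:
        # A view registers a callback to be told about new drawings.
        self._listeners.append(listener)

    def draw(self, annotation: Annotation) -> None:
        # Store the stroke, then forward it to every view immediately.
        self.annotations.append(annotation)
        for listener in self._listeners:
            listener(annotation)


# The immersive view is modelled as a plain list that receives strokes.
scene = SharedScene()
immersive_view: List[Annotation] = []
scene.subscribe(immersive_view.append)

# A stroke drawn on the desktop display shows up in the immersive view.
scene.draw(Annotation("desk user", ((0.0, 0.0, 0.0), (1.0, 0.0, 0.5))))
```

Keeping the annotation immutable means each view can hold the same object safely without one device altering a stroke the other is rendering.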

This project was done with Corentin Fatus in partnership with the research institute B<>com, which took our concept further to turn it into a professional solution.