Artificial Skin Sensor for Human-Robot Interaction

The design of robotic skin sensors is often the result of a trade-off between technical capabilities and rarely considers human factors.

We propose a novel approach for compliant, human-like artificial skin sensors for robots, with mechanical properties similar to those of human skin and capable of precisely detecting touch.

You can buy the robot skin sensor hardware kit at https://muca.cc.

This is a research project by Marc Teyssier, Brice Parilusyan, Anne Roudaut and Jürgen Steimle.

This artificial skin sensor is precise, robust, low-cost, deformable, familiar and intuitive to use, and can be easily replicated by robotics practitioners.

Design challenges

Physical Human-Robot Interaction (pHRI) is beneficial for communication in social interaction and for performing tasks collaboratively. Robotic devices embed sensors for this purpose; however, these sensors are often the result of a trade-off between technical capabilities and rarely consider human factors. The development of this sensor followed several design principles:

- The tactile acuity (or resolution) of the artificial skin should be high and allow the detection of complex tactile information, such as single-touch or multi-touch finger pressure, location or shape.

- The artificial skin should be soft, comfortable to touch and have haptic properties similar to human skin.

- The geometry and materials of artificial skin should be compliant to fit the robot’s curved surfaces and cover large surfaces.

- The artificial skin should be low-cost and easy to fabricate in order to foster replication and widen its use within the robotic community.

Sensing Principle

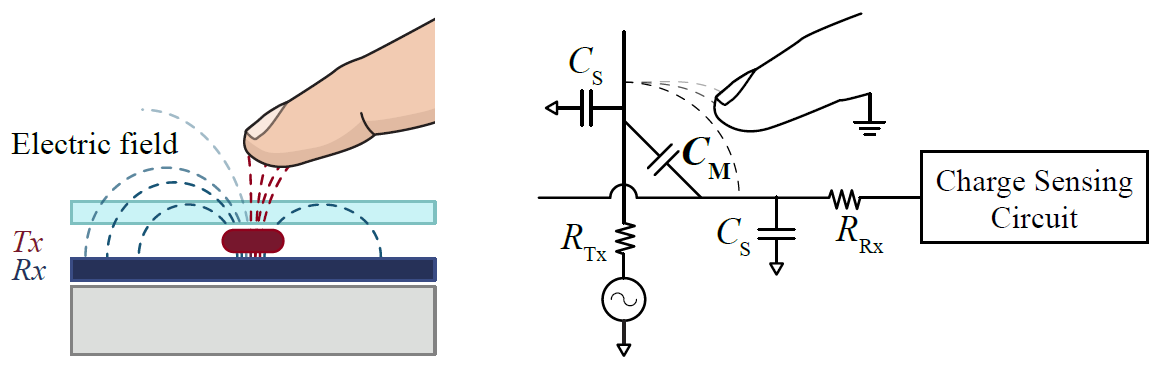

The sensing principle is based on projected mutual capacitance, which is the industry standard for multi-touch sensors. This technology identifies touch by measuring the local change in capacitance on a grid array of conductive traces (or electrodes). A capacitor is formed at each intersection between a transmit (Tx) electrode and a receive (Rx) electrode. When a human finger gets close, the capacitive coupling between the two electrodes is reduced because the finger disturbs the electric field between them. To measure the capacitance of the whole surface, we sequentially apply an excitation signal to each Tx electrode while the Rx electrodes measure the received signal.
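The sequential scan described above can be illustrated with a minimal Python sketch. It is not the project's firmware: the function names (`scan_grid`, `touch_delta`, `simulated_reader`), the grid size and the capacitance values are all hypothetical, and the hardware read is replaced by a simulated callback.

```python
from typing import Callable, List, Optional, Tuple

Frame = List[List[float]]


def scan_grid(num_tx: int, num_rx: int,
              read_rx: Callable[[int, int], float]) -> Frame:
    """Drive each Tx line in turn and read every Rx line.

    Returns a num_tx x num_rx frame with one value per electrode intersection.
    """
    frame: Frame = []
    for tx in range(num_tx):
        # On real hardware, the excitation signal would be applied to line `tx` here.
        frame.append([read_rx(tx, rx) for rx in range(num_rx)])
    return frame


def touch_delta(baseline: Frame, frame: Frame) -> Frame:
    """A nearby finger reduces mutual capacitance, so baseline - frame is positive under a touch."""
    return [[b - f for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(baseline, frame)]


def simulated_reader(touch: Optional[Tuple[int, int]] = None,
                     base: float = 100.0,
                     drop: float = 30.0) -> Callable[[int, int], float]:
    """Hypothetical stand-in for the hardware: returns a lower value at the touched intersection."""
    def read_rx(tx: int, rx: int) -> float:
        return base - drop if touch == (tx, rx) else base
    return read_rx


# Untouched reference frame, then a frame with a finger at Tx 2 / Rx 1 (arbitrary units).
baseline = scan_grid(4, 3, simulated_reader())
frame = scan_grid(4, 3, simulated_reader(touch=(2, 1)))
delta = touch_delta(baseline, frame)
```

In this sketch, `delta` is zero everywhere except at the touched intersection, which is the kind of per-intersection map a touch detection step would then process.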

Sensor Fabrication

The sensor relies on different silicone elastomers to replicate the layers of human skin and comprises an embedded electrode matrix to perform mutual capacitance sensing. This fabrication process has the advantage of encapsulating the sensing electrodes between different skin layers, ensuring robustness and durability.

You can buy the open-source sensor hardware kit at https://muca.cc.

Characterization

We performed a series of characterizations of a single sensing capacitor and the touch processing algorithm on the entire

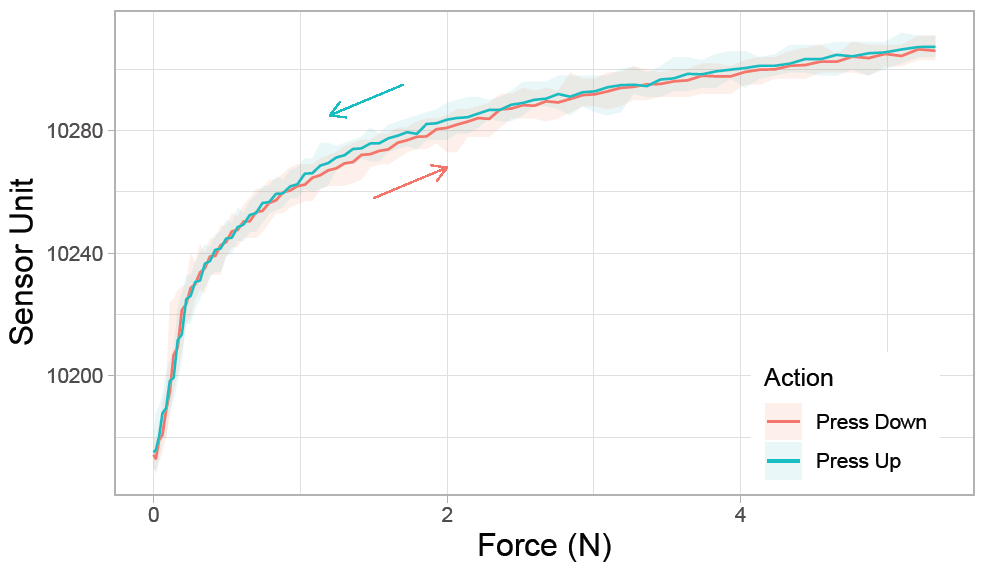

- Response to force. The sensor response to force follows a logarithmic curve. Our initial results show low hysteresis: we measured a negligible difference of 2 measurement units between pressing down and releasing.

- Signal-to-noise ratio. The hardware sensitivity of the sensor can be adjusted. The sensor SNR ranges from 60 dB to 25 dB.

- Tactile Acuity. The signal processing pipeline provides a 0.5 mm spatial acuity (standard deviation = 0.2 mm).
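To give a sense of scale for the SNR figures above, the short sketch below converts decibels to a linear signal-to-noise ratio. It assumes the common amplitude convention SNR_dB = 20 · log10(signal / noise); the source does not state which convention was used, and the function names are hypothetical.

```python
import math


def snr_db(signal_amplitude: float, noise_rms: float) -> float:
    """SNR in decibels, assuming the amplitude convention (20 * log10)."""
    return 20.0 * math.log10(signal_amplitude / noise_rms)


def amplitude_ratio(db: float) -> float:
    """Inverse conversion: linear signal-to-noise amplitude ratio for a value in dB."""
    return 10.0 ** (db / 20.0)


# The two ends of the reported SNR range, as linear amplitude ratios.
high_ratio = amplitude_ratio(60.0)
low_ratio = amplitude_ratio(25.0)
```

Under this assumption, 60 dB corresponds to a touch signal about 1000 times larger than the noise, and 25 dB to a signal about 17.8 times larger.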

More information

This project was published at the IEEE International Conference on Robotics and Automation 2021.

For any requests or questions, please reach me via email at contact@marcteyssier.com or on Twitter at @marcteyssier.

This research was conducted at the Saarland University Human-Computer Interaction Lab and the De Vinci Innovation Center. This project received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement 714797 ERC Stg InteractiveSkin) and from the Engineering and Physical Sciences Research Council (EPSRC, EP/P004342/1).

Publication

Human-Like Artificial Skin Sensor for Physical Human-Robot Interaction. Marc Teyssier, Brice Parilusyan, Anne Roudaut and Jürgen Steimle. In Proceedings of the IEEE International Conference on Robotics and Automation, 06/2021.